Testing Concepts

A test on our platform checks your AI models for a specific aspect required for trustworthy AI, e.g. fairness, robustness, or security. Each test produces both a continuous score (0 - 100) and a binary score (passed/failed). Model performance on a test is evaluated using metrics, which can be configured per test.

Metrics

Metrics are the core evaluation criteria that determine whether a test passes or fails. To allow evaluations tailored to the use case, each metric has a lower and an upper threshold, which are used to judge whether the metric value is acceptable. For more details on how to configure metrics, please refer to the metrics section.
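As an illustration of the threshold check, here is a minimal sketch. The class name `MetricThreshold` and its `passes` method are hypothetical and not part of the platform's API. The sketch assumes that both bounds are inclusive and that an unset bound means "no limit"; the platform may behave differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MetricThreshold:
    """Hypothetical container for a metric's lower and upper threshold."""

    lower: Optional[float] = None  # None means no lower limit (assumption)
    upper: Optional[float] = None  # None means no upper limit (assumption)

    def passes(self, value: float) -> bool:
        """Return True if value lies within the inclusive [lower, upper] range."""
        if self.lower is not None and value < self.lower:
            return False
        if self.upper is not None and value > self.upper:
            return False
        return True


# Example: a metric that is acceptable between 0.8 and 1.0.
accuracy_threshold = MetricThreshold(lower=0.8, upper=1.0)
acceptable = accuracy_threshold.passes(0.9)
too_low = accuracy_threshold.passes(0.7)
```

With these assumptions, a value of 0.9 is accepted and a value of 0.7 is rejected because it falls below the lower threshold.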

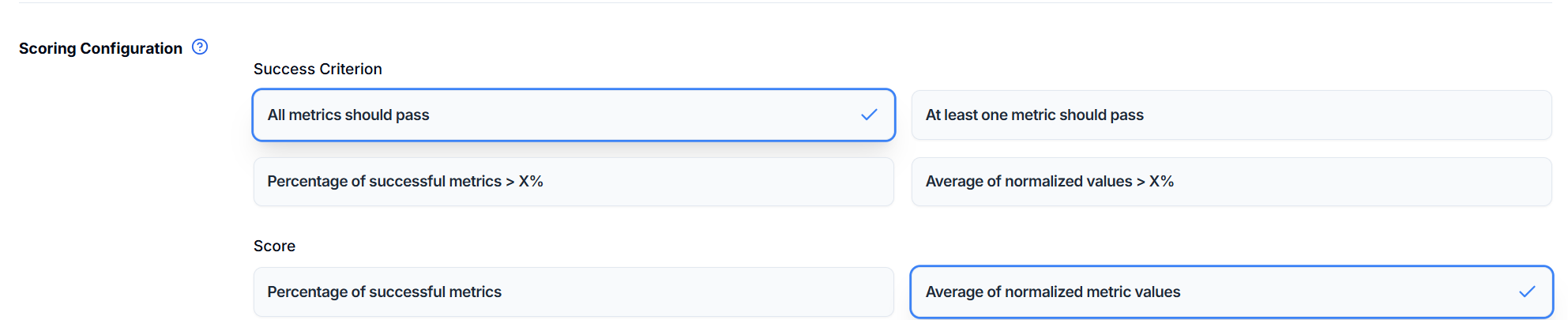

Scoring Configuration

The binary (passed/failed) result of a test can be determined in one of four ways:

- All Metrics: All configured metrics must pass their thresholds

- At Least One: At least one metric must pass its threshold

- Percentage Threshold: A specified percentage of metrics must pass their thresholds

- Average Threshold: The average score across all metrics must meet a threshold
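The four options above can be sketched as a single decision function. This is only an illustration: `PassMode` and `evaluate_test` are hypothetical names, not platform identifiers. The sketch assumes that metric scores are already on a 0 - 100 scale, that the percentage and average thresholds are also expressed on that scale, and that "meeting" a threshold means greater than or equal to it.

```python
from enum import Enum
from typing import Optional, Sequence


class PassMode(Enum):
    """Hypothetical names for the four scoring options."""

    ALL_METRICS = "all_metrics"
    AT_LEAST_ONE = "at_least_one"
    PERCENTAGE_THRESHOLD = "percentage_threshold"
    AVERAGE_THRESHOLD = "average_threshold"


def evaluate_test(
    mode: PassMode,
    passed: Sequence[bool],
    scores: Sequence[float],
    threshold: Optional[float] = None,
) -> bool:
    """Decide whether a test passes.

    passed[i] says whether metric i is within its thresholds;
    scores[i] is that metric's score on an assumed 0 - 100 scale.
    """
    if not passed or len(passed) != len(scores):
        raise ValueError("passed and scores must be non-empty and equally long")

    if mode is PassMode.ALL_METRICS:
        return all(passed)
    if mode is PassMode.AT_LEAST_ONE:
        return any(passed)

    # The remaining two modes need a configured threshold.
    if threshold is None:
        raise ValueError(f"{mode.name} requires a threshold")
    if mode is PassMode.PERCENTAGE_THRESHOLD:
        return 100.0 * sum(passed) / len(passed) >= threshold
    if mode is PassMode.AVERAGE_THRESHOLD:
        return sum(scores) / len(scores) >= threshold
    raise ValueError(f"unknown mode: {mode}")


# Example: three metrics, two of which pass; the average score is 70.
passed = [True, True, False]
scores = [90.0, 80.0, 40.0]
result_all = evaluate_test(PassMode.ALL_METRICS, passed, scores)
result_any = evaluate_test(PassMode.AT_LEAST_ONE, passed, scores)
result_pct = evaluate_test(PassMode.PERCENTAGE_THRESHOLD, passed, scores, 60.0)
result_avg = evaluate_test(PassMode.AVERAGE_THRESHOLD, passed, scores, 75.0)
```

In this example the test fails under All Metrics (one metric fails) and under Average Threshold (70 is below 75), but passes under At Least One and under a Percentage Threshold of 60 (about 66.7% of the metrics pass).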

The continuous score (0 - 100) can be calculated using one of two methods:

- Percentage Successful: Calculates the percentage of metrics that pass their thresholds

- Average Normalized: Calculates the average of normalized metric scores
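The two methods can be illustrated with a short sketch. The function names are hypothetical, and the normalization rule is an assumption: this text does not specify how metric scores are normalized, so the sketch uses min-max scaling of each metric onto 0 - 100 from an assumed known value range, clipped at both ends, with higher values treated as better.

```python
from typing import Sequence, Tuple


def percentage_successful(passed: Sequence[bool]) -> float:
    """Percentage (0 - 100) of metrics that pass their thresholds."""
    if not passed:
        raise ValueError("at least one metric is required")
    return 100.0 * sum(passed) / len(passed)


def normalize(value: float, minimum: float, maximum: float) -> float:
    """Min-max scale value onto 0 - 100 (assumed rule), clipped to that range."""
    if maximum <= minimum:
        raise ValueError("maximum must be greater than minimum")
    scaled = 100.0 * (value - minimum) / (maximum - minimum)
    return max(0.0, min(100.0, scaled))


def average_normalized(metrics: Sequence[Tuple[float, float, float]]) -> float:
    """Average of normalized scores; each item is (value, minimum, maximum)."""
    if not metrics:
        raise ValueError("at least one metric is required")
    return sum(normalize(v, lo, hi) for v, lo, hi in metrics) / len(metrics)


# Example: four metrics, three of which pass their thresholds.
pct_score = percentage_successful([True, True, False, True])

# Example: one metric at 0.5 on a 0 - 1 range, one at 25 on a 0 - 100 range.
avg_score = average_normalized([(0.5, 0.0, 1.0), (25.0, 0.0, 100.0)])
```

Under these assumptions, the first example yields 75 (three of four metrics pass), and the second yields 37.5 (the average of the normalized scores 50 and 25).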